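library(tidyverse)  # provides tibble() and pivot_longer()

set.seed(12345)  # fix the RNG so the simulated data are reproducible

# Simulate 50 observations per design, then reshape to long format:
# one row per observation, with columns `design` and `percentage`.
df = tibble(design_a = rnorm(50, 6.2, 0.5),
            design_b = rnorm(50, 7.0, 0.5),
            design_c = rnorm(50, 6.5, 0.5)) |>
  pivot_longer(cols = everything(), names_to = "design", values_to = "percentage")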

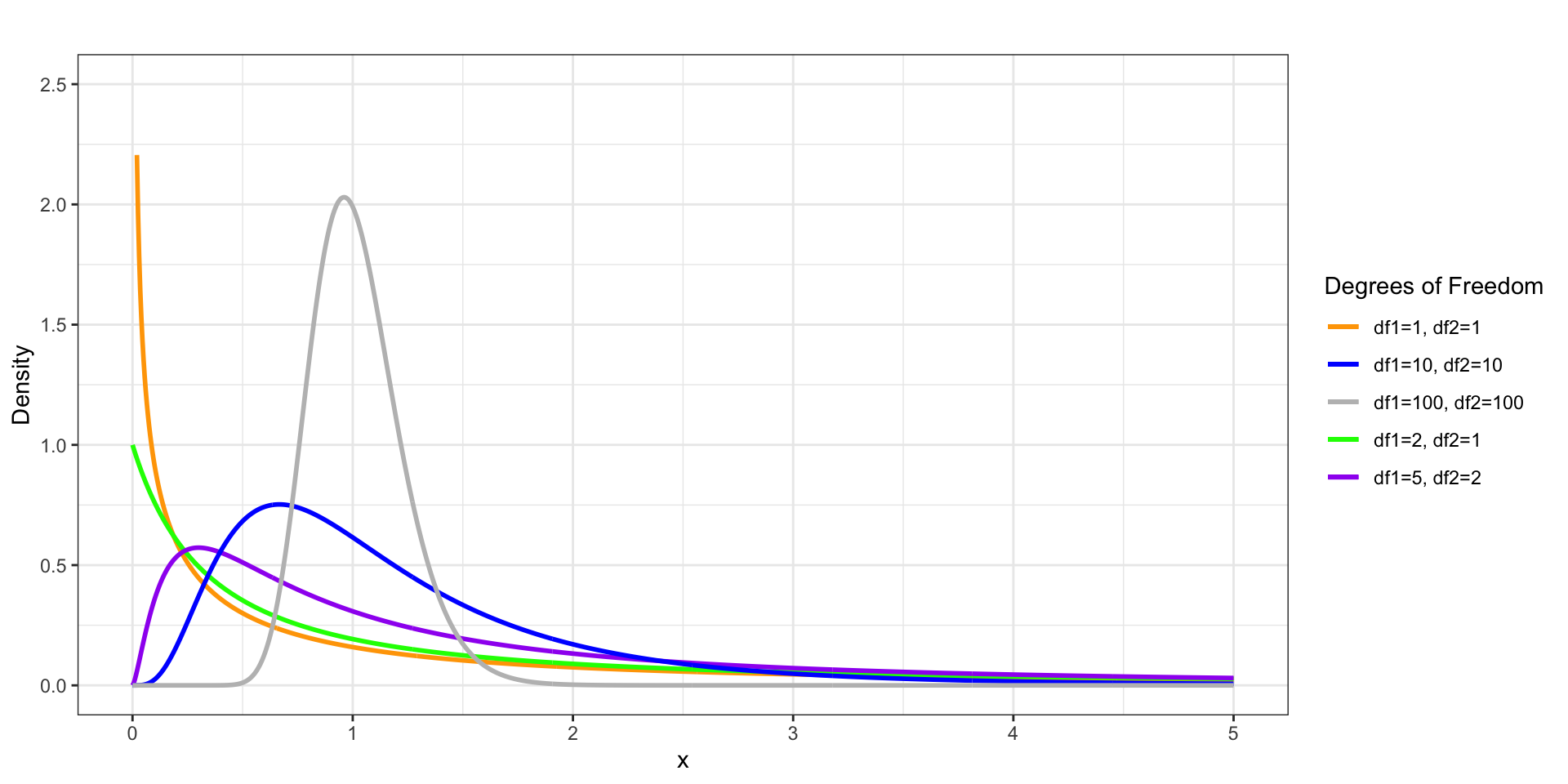

result = aov(percentage ~ design, data = df)

summary(result)

             Df Sum Sq Mean Sq F value   Pr(>F)    
design        2  20.48  10.241   32.62 1.89e-12 ***
Residuals   147  46.15   0.314                     
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
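
As a quick check on the table, the F value can be recovered from the sums of squares and degrees of freedom it reports. The following is a sketch using the rounded values printed above, so the last digit may differ slightly; the symbols $k$ (number of designs) and $N$ (total observations) are introduced here for illustration and do not appear in the original code.

$$
\begin{aligned}
\text{df}_{\text{design}} &= k - 1 = 3 - 1 = 2, \qquad
\text{df}_{\text{residuals}} = N - k = 150 - 3 = 147 \\
\text{MS}_{\text{design}} &= \frac{\text{SS}_{\text{design}}}{\text{df}_{\text{design}}} = \frac{20.48}{2} \approx 10.24 \\
\text{MS}_{\text{residuals}} &= \frac{\text{SS}_{\text{residuals}}}{\text{df}_{\text{residuals}}} = \frac{46.15}{147} \approx 0.314 \\
F &= \frac{\text{MS}_{\text{design}}}{\text{MS}_{\text{residuals}}} \approx \frac{10.24}{0.314} \approx 32.6
\end{aligned}
$$

With a p-value of 1.89e-12, this F value indicates that at least one design mean differs from the others; the table alone does not say which.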